What Is Retrieval-Augmented Generation (RAG)?

In the rapidly evolving field of vascular surgery, integrating artificial intelligence (AI) into clinical practice has opened new avenues for enhancing patient care, surgical decision-making, and medical education. Among the AI technologies making significant impacts is Retrieval-Augmented Generation (RAG), which offers unique capabilities tailored specifically to the complexities faced by vascular surgeons.

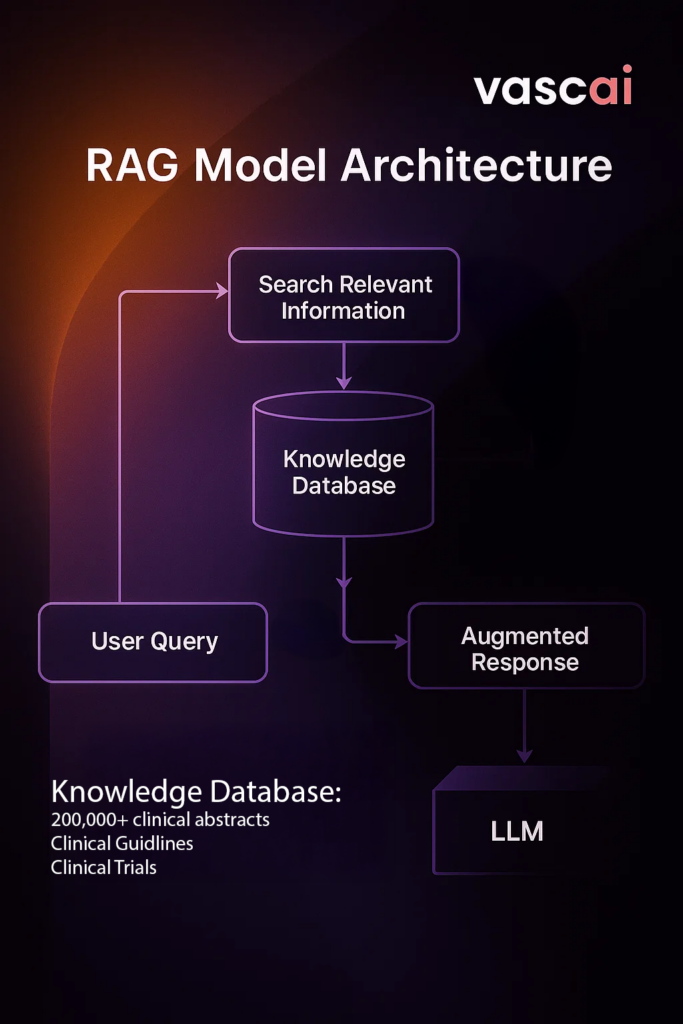

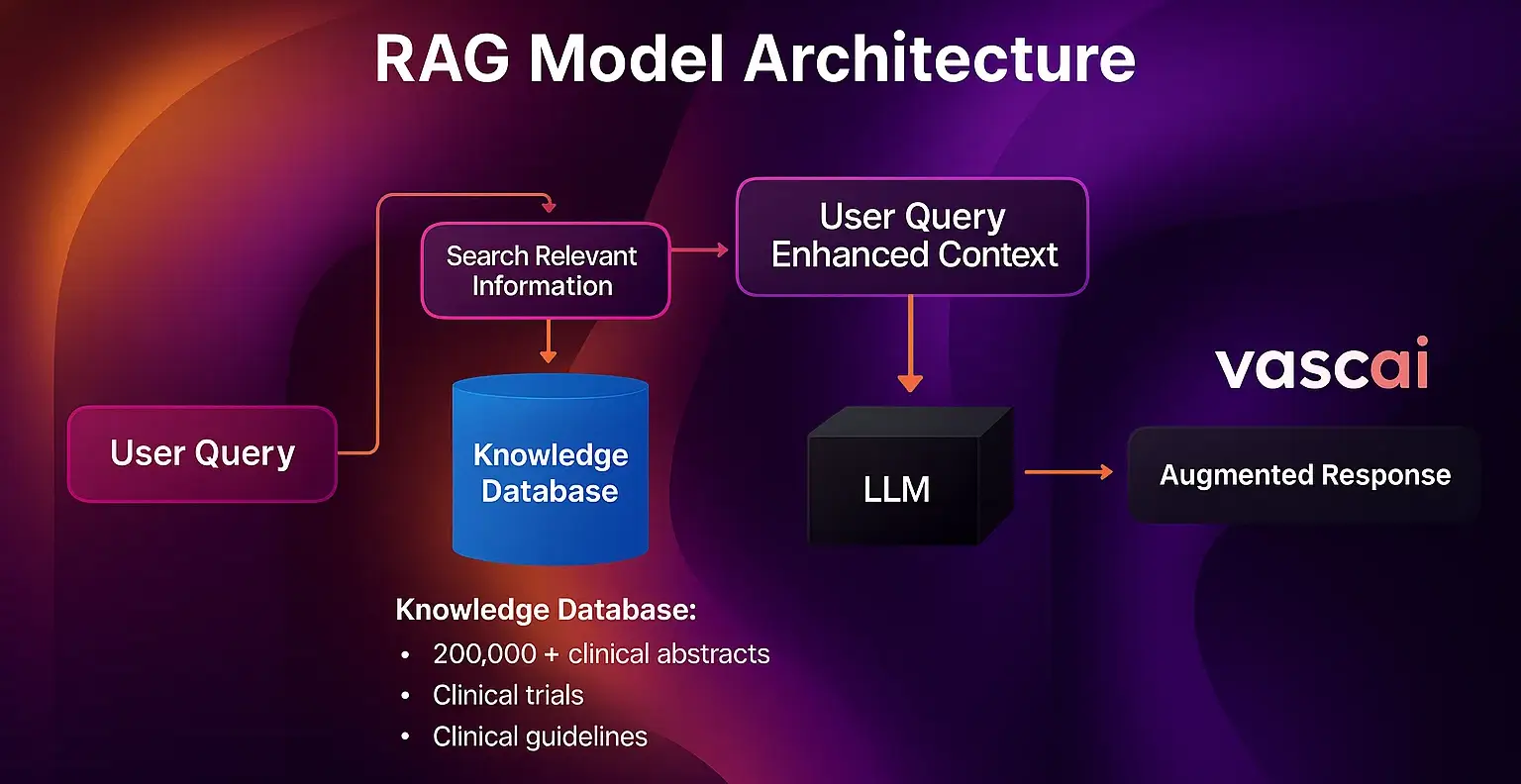

Retrieval-Augmented Generation (RAG) combines the power of large language models (LLMs) with external knowledge bases to deliver precise, contextually relevant information. Unlike standard LLMs, which rely solely on static pretraining, RAG models actively retrieve information from an external corpus—such as clinical guidelines, research papers, or patient records—at the time of the query. This retrieval step brings in fresh, authoritative data which the model uses to construct well-grounded responses.

The RAG architecture consists of two primary modules: a retriever and a generator. The retriever identifies relevant documents from a vast, indexed knowledge base using similarity search techniques (e.g., dense vector embeddings). These documents are then passed to the generator, typically a transformer-based LLM, which integrates the retrieved content into a coherent and informed response. This architecture not only enhances the factual accuracy of outputs but also enables source attribution, allowing users to verify information with original citations.
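The retrieval step can be illustrated with a minimal sketch. It assumes a toy bag-of-words vector in place of a real dense embedding model, and the function names (`embed`, `cosine`, `retrieve`), document IDs, and corpus text are hypothetical placeholders, not part of any system described here.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a dense embedding: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Return the k document IDs most similar to the query, with their scores."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in corpus.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical corpus: the IDs and topic strings are placeholders only.
corpus = {
    "guideline-aaa": "abdominal aortic aneurysm repair threshold and surveillance",
    "review-dissection": "type b aortic dissection medical management",
    "note-pad": "peripheral arterial occlusive disease claudication exercise therapy",
}

top = retrieve("aortic dissection management", corpus, k=2)
for doc_id, score in top:
    print(f"{doc_id}: {score:.3f}")
```

A production retriever would likely swap `embed` for a learned embedding model and the linear scan for an approximate nearest-neighbor index, but the ranking logic stays the same: score every candidate against the query and pass the top results to the generator.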

In practice, the retriever and generator sit alongside a knowledge base and an integration layer, so a typical RAG system comprises four components:

Knowledge Base: An external repository containing domain-specific data, such as clinical guidelines, research papers, and patient records.

Retriever: An AI model that searches the knowledge base to fetch relevant information based on the user’s query.

Integration Layer: This component coordinates the interaction between the retriever and the generator, ensuring seamless data flow and processing.

Generator: A generative AI model that synthesizes the retrieved information with the user’s query to produce a coherent and contextually relevant response.

This architecture allows the AI system to provide responses grounded in the most current and relevant data, enhancing accuracy and reliability.
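One way to picture how the four components fit together is the sketch below. Every class and method name is hypothetical, the retriever uses simple keyword overlap rather than a real search model, and the `Generator` is a stub that stands in for an LLM call, so this is an illustration of the data flow rather than a working clinical system.

```python
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


class KnowledgeBase:
    """External repository of domain-specific documents."""

    def __init__(self, docs: list[Document]) -> None:
        self.docs = list(docs)


class Retriever:
    """Ranks documents by keyword overlap with the query (toy scoring)."""

    def __init__(self, kb: KnowledgeBase) -> None:
        self.kb = kb

    def search(self, query: str, k: int = 2) -> list[Document]:
        terms = set(query.lower().split())
        ranked = sorted(
            self.kb.docs,
            key=lambda d: len(terms & set(d.text.lower().split())),
            reverse=True,
        )
        return ranked[:k]


class Generator:
    """Stub for an LLM: a real generator would send the prompt to a model."""

    def generate(self, prompt: str, sources: list[Document]) -> str:
        cited = ", ".join(f"[{i}] {d.doc_id}" for i, d in enumerate(sources, 1))
        return f"(model answer based on {len(sources)} passages) Sources: {cited}"


class IntegrationLayer:
    """Coordinates retrieval, prompt assembly, and generation."""

    def __init__(self, retriever: Retriever, generator: Generator) -> None:
        self.retriever = retriever
        self.generator = generator

    def answer(self, query: str, k: int = 2) -> str:
        sources = self.retriever.search(query, k)
        # Number each passage so the answer can cite it.
        context = "\n".join(f"[{i}] {d.text}" for i, d in enumerate(sources, 1))
        prompt = f"Answer using only the numbered passages.\n{context}\nQuestion: {query}"
        return self.generator.generate(prompt, sources)


# Hypothetical documents used only to exercise the pipeline.
kb = KnowledgeBase([
    Document("kb-1", "aortic dissection imaging workup"),
    Document("kb-2", "aortic aneurysm surveillance"),
    Document("kb-3", "carotid stenosis screening"),
])
pipeline = IntegrationLayer(Retriever(kb), Generator())
result = pipeline.answer("aortic dissection workup")
print(result)
```

Keeping the integration layer separate from the retriever and generator means either one can be replaced, for example by a different search index or a different model, without changing how citations are numbered and returned.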

VASC.AI: A Case Study in Vascular Surgery

To rigorously evaluate the utility of such platforms in a domain-specific context, the AI-ASCEND Benchmark (Artificial Intelligence: Assessment of Standards, Compliance, Efficacy, Navigation, & Decision-Making) was developed. The benchmark assesses AI systems on real-world, complex aortic cases across five domains: accuracy of differential diagnosis, thoroughness of workup suggestions, clarity of the medical optimization plan, relevance of treatment options, and overall usefulness in decision-making.

Each model was tested on 35 standardized clinical scenarios involving thoracic and abdominal aortic aneurysms, aortic dissections, occlusive disease, and complex complications such as aortoenteric fistula. The scenarios covered both open surgical and endovascular approaches. Vascular surgery experts independently graded the responses using a 5-point rubric for each domain.

Statistical analyses, including one-way ANOVA and pairwise t-tests, were performed to identify significant differences between the platforms, with statistical significance defined as p < 0.05.
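For readers unfamiliar with one-way ANOVA, the sketch below computes the F statistic by hand in plain Python. The scores are invented toy numbers on a 5-point scale, not data from the study, and the function name `one_way_anova_f` is hypothetical. Turning F into a p-value requires the F distribution, which a statistics library such as SciPy (`scipy.stats.f_oneway`) provides.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    k, n = len(groups), len(all_scores)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy rubric scores for three hypothetical platforms (NOT study data).
toy_scores = [
    [5, 4, 5, 4],
    [3, 3, 4, 2],
    [2, 3, 2, 3],
]
f_stat, df_b, df_w = one_way_anova_f(toy_scores)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F indicates that the differences between platform means are large relative to the spread of scores within each platform. Pairwise t-tests then identify which specific platforms differ.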

VASC.AI’s Superior Performance: Evidence from the AI-ASCEND Benchmark

In a comparative study of VASC.AI versus leading general-purpose models (ChatGPT-4o, Gemini, and Copilot), VASC.AI achieved the highest mean scores and significantly outperformed the other models in every domain (p < 0.0001 for most comparisons).

These findings suggest that RAG-powered tools can outperform generic LLMs by delivering structured, clinically actionable insights with traceable citations, bridging the gap between complex theory and bedside application. VASC.AI’s diagnostic performance, particularly in ambiguous cases requiring nuanced clinical reasoning, highlights the advantage of specialized training data and domain-specific knowledge integration.

The Impact of Specialized AI in Vascular Surgery

Beyond clinical decision-making, RAG enables equitable access to cutting-edge vascular knowledge, particularly in under-resourced healthcare settings. This democratization of expertise can help level the playing field, improve outcomes, and accelerate education globally. By providing detailed rationales and authoritative citations, VASC.AI serves as a valuable tool for guiding both diagnostic and therapeutic decisions and enhances educational opportunities.

The study (pending publication) highlights the critical need for domain-specific AI tools tailored to the unique challenges of vascular surgery. While general-purpose models may suffice for simpler cases, their inability to align recommendations with specialized clinical needs underscores their limitations in addressing the complexities of this field.

In summary, the deployment of Retrieval-Augmented Generation (RAG) in vascular surgery—validated through real-world benchmarking like AI-ASCEND—represents a transformative advancement. With continued refinement, such platforms could redefine surgical planning, medical education, and global access to high-quality vascular care.